Individuals and businesses use advances in artificial intelligence (AI) to create attention-grabbing visuals for design projects, marketing materials, and other purposes. Modern AI services rely on mathematical models that learn patterns from existing images and use them to create new content. Training on large, high-quality datasets allows these services to render nuanced outputs in various styles. In this guide, we will answer the question, “How does AI image generation work?” and consider the main approaches creative professionals use to produce high-quality visual content. We will also analyze the obstacles hindering the widespread adoption of these tools.

What is AI Image Generation?

The term describes the use of algorithms to create images from written instructions (prompts). Like AI solutions that leverage large language models (LLMs) to produce context-relevant replies, neural image generators analyze the phrases in a prompt and predict an output that matches them.

AI tools can produce natural-looking photos and enhance users' pictures. They interpret instructions well and turn them into visuals. However, content creators sometimes need to adjust their requests to achieve the desired result.

Every AI image generator is trained to produce visuals from scratch. While its content looks creative and original, it may seem generic unless the user refines the prompt. Such services can also merge various styles to create visuals relevant to a specific context.

The datasets used during training contain vast numbers of images. By learning from them, AI apps create visuals that resemble this content. They also tend to follow a consistent default style unless the user specifies how the output should look.

Each platform has its own strengths. Some use neural style transfer to recreate a specific artistic manner, while others rely on generative adversarial networks (GANs) to achieve high-fidelity outputs. Diffusion models, in turn, create imagery by gradually removing noise from a random starting point until a coherent picture emerges.

AI-Assisted Image Generation Technologies

Using AI to create engaging content allows individuals and organizations to speed up their workflows and save money. However, deploying such tools effectively requires understanding the technologies that power them. The widespread adoption of AI across many industries has produced solutions that let professionals from different fields design marketing materials, illustrations, and infographics that support decision-making.

Natural Language Processing (NLP)

NLP allows AI systems to interpret human instructions accurately and convert them into a form machines can process. The Contrastive Language-Image Pre-training (CLIP) model, for example, is a key component of systems such as DALL-E 2. CLIP learns a shared space in which both text and images are represented as vectors, so a prompt and a picture that matches it end up close to each other. This is what lets a generator produce detailed art based on its interpretation of human language.

When a user enters a prompt, the model converts it into a numerical representation (an embedding) that describes the elements it mentions and how they relate to each other. This allows the program to map all the details and establish connections between them. As a result, the generator knows which components it should add to an image and can interpret the input text with higher precision.
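The idea of matching prompts and pictures as vectors can be illustrated with a small sketch of CLIP-style contrastive scoring. This is a simplified illustration, not CLIP itself: the 3-dimensional embeddings below are made up (real CLIP vectors have hundreds of dimensions and come from trained encoders), and the function names and the temperature value of 0.07 are assumptions chosen for readability. Cosine similarity scores every text against every image, and the contrastive loss is low when each text is most similar to its own image.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def similarity_matrix(text_vecs, image_vecs):
    """Cosine similarity between every text embedding and every image embedding."""
    t = [normalize(v) for v in text_vecs]
    i = [normalize(v) for v in image_vecs]
    return [[sum(a * b for a, b in zip(tv, iv)) for iv in i] for tv in t]

def contrastive_loss(sim, temperature=0.07):
    """Symmetric cross-entropy in which pair k (text k, image k) is the correct match."""
    n = len(sim)

    def cross_entropy(rows):
        total = 0.0
        for k, row in enumerate(rows):
            logits = [s / temperature for s in row]
            m = max(logits)  # subtract the max for numerical stability
            log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
            total += log_sum - logits[k]
        return total / n

    columns = [[sim[r][c] for r in range(n)] for c in range(n)]
    return (cross_entropy(sim) + cross_entropy(columns)) / 2

# Hypothetical embeddings, e.g. for "a cat", "a dog", "a car" and three matching pictures.
texts = [[1.0, 0.1, 0.0], [0.0, 1.0, 0.2], [0.1, 0.0, 1.0]]
images = [[0.9, 0.2, 0.1], [0.1, 0.8, 0.3], [0.0, 0.1, 0.9]]

sim = similarity_matrix(texts, images)
best = [row.index(max(row)) for row in sim]
print(best)  # [0, 1, 2]: each prompt is closest to its own picture
print(contrastive_loss(sim))
```

During training, a CLIP-like model adjusts its encoders to lower this loss across millions of caption and image pairs, which is what places matching prompts and pictures close together.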

Generative Adversarial Networks (GANs)

GANs are powerful machine learning (ML) models that use two neural networks to produce natural-looking outputs. One network, the generator, creates artificial images, while the other, the discriminator, analyzes a sample and decides whether it is real or generated. Because the two function as adversaries, each pushes the other to improve, and the system's results get better over time.

When the discriminator labels a picture as fake, the generator adjusts its parameters so that its next outputs are harder to tell apart from real images. The discriminator, in turn, learns from real training images to judge whether a sample is authentic. As both networks improve, the generator learns to produce believable imagery.
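The adversarial objective can be made concrete with the two loss functions a GAN typically optimizes. This sketch assumes the standard binary cross-entropy formulation with the commonly used "non-saturating" generator loss; the inputs are hypothetical discriminator outputs (probabilities that a sample is real), not results from a trained model. It contrasts an early stage, where the discriminator easily spots fakes, with the idealized equilibrium, where it can only guess 0.5.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: the discriminator wants D(real) -> 1 and D(fake) -> 0."""
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants D(fake) -> 1."""
    return sum(-math.log(p) for p in d_fake) / len(d_fake)

# Early in training: the discriminator confidently separates real from fake samples.
early_d = discriminator_loss([0.9, 0.95], [0.05, 0.1])
early_g = generator_loss([0.05, 0.1])

# Idealized equilibrium: fakes are indistinguishable, so D outputs 0.5 for everything.
eq_d = discriminator_loss([0.5, 0.5], [0.5, 0.5])
eq_g = generator_loss([0.5, 0.5])

print(early_d, early_g)  # low discriminator loss, high generator loss
print(eq_d, eq_g)        # 2*ln(2) and ln(2)
```

In a real GAN, these losses are computed on batches of images, and each network's weights are updated by gradient descent on its own loss, alternating between the two.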

Diffusion Models

Diffusion models produce realistic outputs by learning the patterns in their training data. During training, noise is gradually added to images, and the model learns to reverse this process; at generation time, it starts from random noise and removes it step by step to create a new picture. A shortcoming of the approach is that outputs can sometimes closely resemble training images, which fuels mistrust in algorithmic systems. The process comprises multiple stages:

- Forward diffusion: Gaussian noise is added to a training image over many small steps;

- Training: A neural network learns to predict the noise that was added at each step, which teaches it how to undo the operation;

- Reversal: The model removes the predicted noise step by step to recover the image in its original, undistorted state;

- Data creation: To generate a new image, the model starts from pure random noise and applies the reversal process, guided by the text prompt, until the output meets the requirements described in it.

The same technique can be used to generate not only pictures but also audio.
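The forward and reverse steps can be sketched in a few lines of plain Python. This is a minimal illustration assuming the DDPM-style formulation with a linear noise schedule (beta from 0.0001 to 0.02 over 1,000 steps); the function names are hypothetical, the "image" is just four numbers, and the "perfect" noise prediction stands in for the trained neural network a real system would use.

```python
import math
import random

T = 1000
# Linear noise schedule: how much noise is added at each of the T steps.
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]

# alpha_bars[t] is the fraction of the original signal's variance left after step t.
alpha_bars = []
prod = 1.0
for b in betas:
    prod *= 1.0 - b
    alpha_bars.append(prod)

def add_noise(x0, t, rng):
    """Forward diffusion in closed form: x_t = sqrt(a_bar)*x0 + sqrt(1 - a_bar)*eps."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps

def estimate_x0(xt, eps_pred, t):
    """Undo the noising given a noise prediction (exact when eps_pred is the true noise)."""
    a = alpha_bars[t]
    return [(x - math.sqrt(1.0 - a) * e) / math.sqrt(a) for x, e in zip(xt, eps_pred)]

def training_loss(eps, eps_pred):
    """The network is trained to predict the added noise; the loss is mean squared error."""
    return sum((e - p) ** 2 for e, p in zip(eps, eps_pred)) / len(eps)

rng = random.Random(0)
x0 = [0.2, -0.5, 0.9, 0.0]  # a toy "image" of four pixel values
xt, eps = add_noise(x0, 500, rng)
print(alpha_bars[0], alpha_bars[-1])  # almost all signal at the start, almost none at the end
print(estimate_x0(xt, eps, 500))      # recovers x0 (up to rounding) with a perfect prediction
```

Because almost no signal remains after the last step, pure random noise is a valid starting point for generation; the trained network then removes noise one step at a time, steered by the prompt embedding.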

Neural Style Transfer

Using this deep learning technique, anyone can render the content of one picture in the style of another to produce a unique output. The layers of a convolutional neural network recognize edges and textures, which lets such tools manipulate illustrations with improved precision. However, some elements of the source content may occasionally be lost, or the style may differ from the reference. These issues can usually be reduced by rebalancing how much weight the method gives to preserving content versus matching style. In the future, new developments may replace existing methods and allow algorithm-based services to create even better visuals.
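The balance between content and style mentioned above can be shown with the two losses used in classic optimization-based style transfer. This sketch assumes Gram-matrix style loss and feature-map content loss; the tiny "feature maps" are made up (in practice they come from layers of a pretrained network), normalization constants vary between implementations, and the weights in `total_loss` are hypothetical. The example shows why Gram matrices capture style: rearranging where features appear leaves the style loss at zero but changes the content loss.

```python
def gram_matrix(features):
    """features[c] is the flattened activation map of channel c from one network layer.
    G[i][j] = sum_k features[i][k] * features[j][k] records which features co-occur,
    regardless of where in the picture they appear."""
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in features] for fi in features]

def mean_squared_error(a, b):
    """Average squared difference between two equally shaped 2-D lists."""
    diffs = [(x - y) ** 2 for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)

def content_loss(gen_feats, content_feats):
    """Penalizes changes to what is where in the picture."""
    return mean_squared_error(gen_feats, content_feats)

def style_loss(gen_feats, style_feats):
    """Penalizes differences in texture statistics, ignoring spatial layout."""
    return mean_squared_error(gram_matrix(gen_feats), gram_matrix(style_feats))

def total_loss(gen, content, style, content_weight=1.0, style_weight=1e3):
    """Hypothetical weights; raising style_weight favors style over preserving content."""
    return content_weight * content_loss(gen, content) + style_weight * style_loss(gen, style)

feats = [[1.0, 0.0, 2.0, 1.0],   # channel 0 (e.g. an edge detector) at 4 positions
         [0.0, 1.0, 1.0, 0.0]]   # channel 1 (e.g. a texture detector)
shuffled = [[row[k] for k in (3, 2, 1, 0)] for row in feats]  # same values, positions reversed

print(gram_matrix(feats))  # [[6.0, 2.0], [2.0, 2.0]]
print(style_loss(shuffled, feats), content_loss(shuffled, feats))  # 0.0 1.0
```

A style transfer tool repeatedly adjusts the generated image to lower `total_loss`, so missing content or a weak style usually points to weights that are out of balance.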

The Most Efficient AI Models

By writing detailed instructions, creative professionals can achieve a high level of realism when creating illustrations with advanced automated tools. How does AI image generation work? It uses algorithms to make predictions and draw graphics. To understand what innovations are behind the success of such solutions, it’s worth looking at the models that empower users to create attention-grabbing visuals.

- DALL-E 2: OpenAI released this model to overcome the limitations of the first version. It combines CLIP with diffusion models, which allows it to interpret instructions accurately. Its prior component converts a text prompt into a CLIP image embedding, which the decoder then uses to create the output. The model generates high-resolution images quickly and lets users choose among several output sizes.

- Midjourney: This model converts text prompts into illustrations and supports many styles. Users access it via Discord. Its key advantage lies in its ability to create visually engaging images in an artistic style. It automatically chooses complementary hues, adjusts highlights and shadows, makes details more pronounced, and creates well-balanced compositions.

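- Stable Diffusion: This model creates highly detailed visuals. It can also fill in missing parts of a picture (inpainting) or extend source photos beyond their borders (outpainting). As a latent diffusion model, it runs the diffusion process on a compressed representation of the image rather than on raw pixels, which makes generation faster and less resource-intensive while still producing believable results.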
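A quick calculation shows why working in latent space makes Stable Diffusion more efficient. This sketch assumes the configuration commonly used by Stable Diffusion v1 models at 512x512 resolution: an autoencoder that shrinks each side by a factor of 8 and produces 4 latent channels. Other versions and resolutions use different numbers.

```python
def num_values(height, width, channels):
    """Number of values the model must process for an array of this shape."""
    return height * width * channels

pixels = num_values(512, 512, 3)            # a 512x512 RGB image
latent = num_values(512 // 8, 512 // 8, 4)  # assumed 8x downsampling with 4 latent channels

print(pixels, latent, pixels // latent)  # 786432 16384 48
```

Under these assumptions, each denoising step handles 48 times fewer values than it would on raw pixels, and the autoencoder's decoder converts the final latent back into a full-resolution picture.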

These solutions democratize access to AI-powered image generation and help companies discover new use cases. However, deploying such models requires some prior experience with the underlying technologies. MetaDialog has a team of specialists who build custom LLMs to help clients leverage algorithms to streamline their processes.

Main Use Cases

Algorithm-driven systems have many potential applications. Digital artists use them to find creative solutions to specific artistic problems. They are also suitable for developing product designs, making educational materials more engaging, and creating illustrations. Here are the main use cases of AI image generation:

- Video game characters and environments;

- AI-edited movie shots;

- Visuals for marketing campaigns;

- Magazine covers;

- Diagnostic images.

In healthcare, AI can create realistic images of affected parts of the body, which may help professionals support their conclusions and improve patient outcomes. While there are still reservations regarding the ethical use of AI, improved protocols may help address this issue.

DALL-E 2 has shown promise in the medical field in experiments where specialists used it to manipulate X-rays, CT scans, and ultrasound images. Its text-to-image capabilities allow healthcare professionals to reconstruct missing details, create full-body radiographs, and perform related tasks. However, while the model reconstructs X-ray images with high precision, it does not always accurately reproduce pathological abnormalities. The use of AI systems is expected to become even more widespread as companies discover new applications across various sectors, allowing them to save valuable resources, foster loyalty, and expand their reach to achieve sustainable growth.

Limitations of Artificial Intelligence

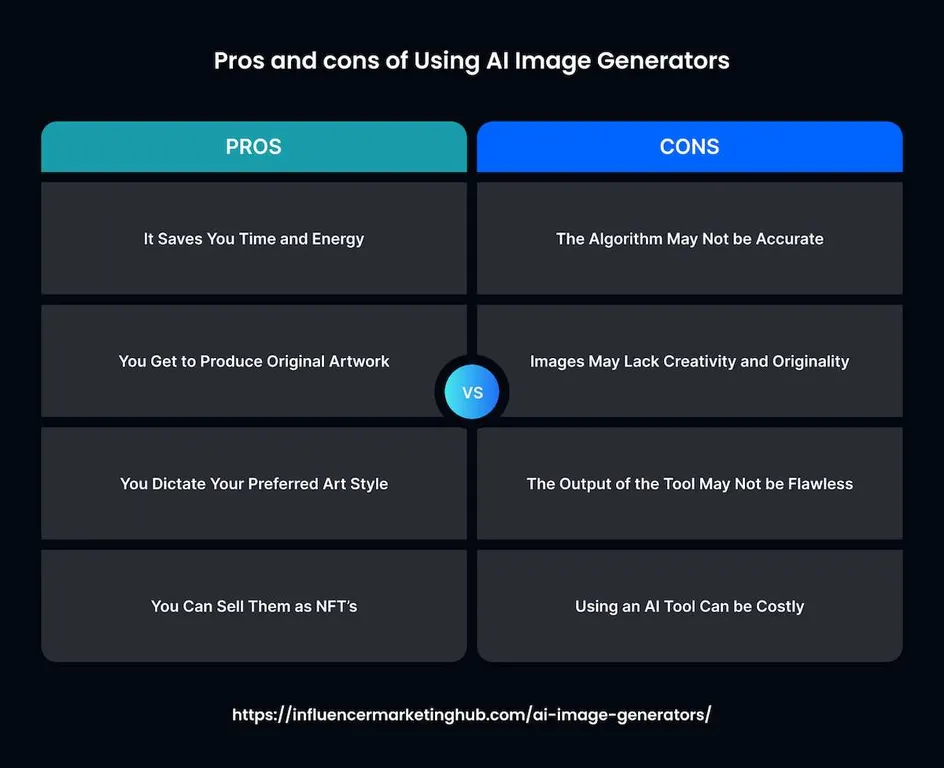

Even though AI-driven services can create hyperrealistic visuals, their use is associated with controversies that make people reluctant to deploy them at scale. Below, we briefly outline the main issues hindering the adoption of AI for creating digital visuals:

- Poor quality: AI platforms often create generic visuals that offer an idealized representation of the world. Pictures of groups of people may include similar-looking individuals, and some figures may have extra hands or fingers. It is quite challenging to adjust AI models so that they function perfectly. Output quality also depends heavily on the training data: if a dataset is biased or of low quality, the system will reproduce those flaws in its content.

- Unnatural faces: Even though modern models like StyleGAN can draw human faces with realistic imperfections, their outputs may still contain subtle artifacts that distinguish them from people in real photos.

- Fine-tuning issues: Training and fine-tuning these models is a complex and expensive process that requires developers to adjust many parameters to achieve their objectives. In the healthcare industry, for instance, a long training process is needed before a model can help streamline diagnostics.

- Copyright issues: Businesses are reluctant to rely on AI for complex projects, as outputs may replicate copyrighted images and lead to lawsuits. Some artists have already filed lawsuits against leading AI companies. It’s also difficult to determine who owns the rights to AI-generated visuals, which makes using them for business purposes risky.

- Misinformation: Deepfake technology has led to the rapid proliferation of misleading visuals of famous people, raising concerns about the potential misuse of AI to spread fake news. As the technology advances, safety mechanisms will be needed to help people differentiate between AI-generated fakes and real content.

While AI apps are unlikely to replace creative professionals who work with visuals daily, they can help artists work on their projects more efficiently and discover new ways to optimize their routines. Using carefully curated datasets, such as photos published under appropriate Creative Commons licenses, can reduce the risk of copyright infringement.

So, how does AI image generation work? These systems rely on complex algorithms to produce realistic content. Many people want to know whether such visuals can be used for commercial purposes. As legislation governing the use of AI is still being developed, deploying AI solutions at scale may be daunting. However, digital artists and graphic designers already use them to come up with new ideas, experiment with different techniques, and design materials with brand elements. MetaDialog has a team of experienced professionals who specialize in integrating AI features with legacy systems. Get in touch with our experts now and discover how to leverage recent innovations to create AI-driven artwork and professional-grade images for your projects.